Our Current Philosophy

We have several projects on the use of machine learning in Radiation Oncology applications. Our focus is on identifying clinically significant problems that can be solved with state-of-the-art machine learning algorithms, in particular deep learning.

These algorithms do well at abstracting information from images: during training they learn the features important for a problem, and they can then extract those features from new images. Today, however, they may not do as well at higher-order reasoning. For example, deep learning can be used to classify objects in an image, and modern algorithms are in fact very good at exactly this. In the image below, deep learning can easily recognize the children, buckets, grass, etc. Humans, however, can put these objects in context and easily identify the scene as an Easter egg hunt.

For medical image analysis and the use of machine learning in Radiation Oncology applications, it is therefore important to distinguish problems that require such contextual reasoning from those that do not. Until deep learning algorithms mature, it may be best to focus on the latter and to work with machine learning experts to design new algorithms for the former.

Current Machine Learning Projects

4DCT Artifact Correction

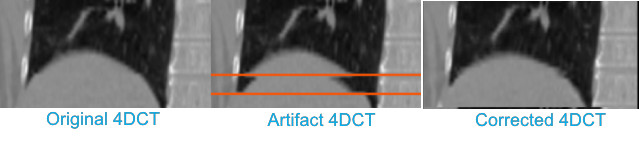

Motion-induced artifacts are present in more than 50% of clinical 4DCT scans. These residual artifacts stem from irregular breathing, selection of incorrect scan parameters (e.g., pitch too high), or changes in breathing rate during scanning. Even subtle artifacts can introduce error into delineation and IGRT processes, while gross artifacts can require rescanning the patient, which delays treatment and adds unnecessary patient dose. We propose to develop and evaluate a retrospective method to mitigate artifacts in existing, reconstructed 4DCT images. The method requires only the reconstructed 4DCT images in DICOM format (no raw data or respiratory signal is required), which is an advantage over other potential solutions that require prospective modification of the scanning system.

The accompanying image shows a simple feasibility test of our method. Artifacts were introduced through a simulation procedure into the ‘ground truth’ image, a low-artifact scan from the DIR-Lab dataset. Our prototype method was then used to try to recover the original ground truth image. Qualitatively, the method appears to recover it well.
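The structure of such a feasibility test can be sketched in a few lines. This is only an illustration under stated assumptions, not our simulation procedure or correction method: the synthetic sphere stands in for a low-artifact scan, and the helper names `simulate_slab_artifact` and `rmse` are hypothetical. It displaces a slab of axial slices to mimic a misaligned couch position and measures how far the corrupted volume is from the ground truth, which is the quantity a correction method would aim to reduce.

```python
import numpy as np

def simulate_slab_artifact(volume, start, stop, shift):
    """Return a copy of `volume` (z, y, x) in which slices [start, stop)
    are displaced by `shift` voxels along y (a crude, assumed artifact model)."""
    corrupted = volume.copy()
    corrupted[start:stop] = np.roll(volume[start:stop], shift, axis=1)
    return corrupted

def rmse(a, b):
    """Root-mean-square error between two volumes of equal shape."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))

# Synthetic stand-in for a low-artifact scan: a bright sphere on a dark background.
z, y, x = np.mgrid[0:32, 0:32, 0:32]
ground_truth = ((z - 16) ** 2 + (y - 16) ** 2 + (x - 16) ** 2 < 10 ** 2).astype(np.float32)

# Corrupt a slab of 8 slices, then quantify the damage against the ground truth.
corrupted = simulate_slab_artifact(ground_truth, start=12, stop=20, shift=4)
error_before = rmse(corrupted, ground_truth)
```

Applying the same `rmse` to a corrected volume would give a simple quantitative complement to the qualitative comparison.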

Learning-Assisted Lung Tumor Target Definition

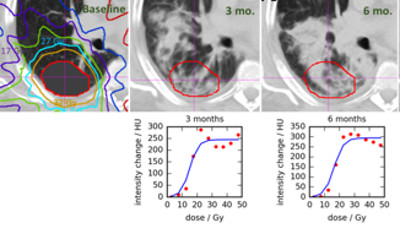

Radiographic changes following stereotactic body radiotherapy (SBRT) are associated with radiation‐induced lung injury (RILI=clinical radiation pneumonitis). Identification of predictive imaging biomarkers for RILI will allow individualized risk assessment and potentially enable prevention through individualized treatment planning or early intervention to reduce the risk of RILI in this vulnerable population. We will evaluate radiographic changes over time, including dose‐based assessment, and integrate these findings with clinical parameters to build an imaging biomarker for assessing individual RILI risk. Deformable image registration of repeated CT scans to correct for large radiation‐induced anatomical distortions of the lung and a radiomics approach with analysis of longitudinal variations of texture features will be employed to improve the power of the predictive model.

Understanding Radiation-Induced Lung Injury after SBRT.

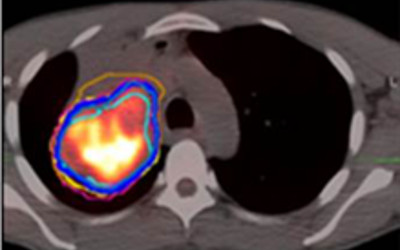

Target definition is one of the largest remaining geometric uncertainties in radiotherapy of locally advanced lung cancer. The first goal of this project is to use local texture to help the physician identify the type of tumor-to-normal-tissue interface (e.g., tumor/hilum, tumor/atelectasis), which could support assisted delineation or region-specific margins. The second goal is to explore image-based markers of early response of lung tumors to RT, using similar texture features. Both subprojects will explore texture features in multi-modality (MRI, CT, PET) imaging.
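As a rough illustration of what a local texture feature is, the sketch below computes the contrast of a gray-level co-occurrence matrix (GLCM) for a small image patch. The choice of GLCM contrast, the function name `glcm_contrast`, the binning window, and the synthetic patches are all assumptions made for this example; the project does not commit to a particular feature set here. Intensities are binned over a fixed range so that values from different patches or time points stay comparable.

```python
import numpy as np

def glcm_contrast(patch, value_range, levels=8):
    """GLCM contrast of a 2D patch for horizontally adjacent pixel pairs.

    Intensities are clipped to `value_range` and quantized into `levels` bins.
    """
    lo, hi = value_range
    scaled = (np.clip(patch, lo, hi) - lo) / (hi - lo)
    q = np.minimum((scaled * levels).astype(int), levels - 1)

    # Count co-occurrences of gray levels in horizontally adjacent pixels.
    glcm = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(glcm, (q[:, :-1].ravel(), q[:, 1:].ravel()), 1.0)
    glcm = glcm + glcm.T   # make the matrix symmetric
    glcm /= glcm.sum()     # normalize counts to joint probabilities

    # Contrast weights each pair by the squared gray-level difference.
    i, j = np.indices((levels, levels))
    return float(np.sum(glcm * (i - j) ** 2))

# Hypothetical 16x16 CT patches in Hounsfield units (synthetic, not patient data).
rng = np.random.default_rng(0)
homogeneous = np.full((16, 16), 40.0)
heterogeneous = 40.0 + rng.normal(0.0, 200.0, (16, 16))

hu_range = (-1000.0, 400.0)  # assumed binning window
c_homogeneous = glcm_contrast(homogeneous, hu_range)
c_heterogeneous = glcm_contrast(heterogeneous, hu_range)
```

The uniform patch yields zero contrast and the textured patch a positive value. Evaluating such a feature patch by patch along a tumor boundary, or on the same region at two time points, is one simple way the two goals above could be approached.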