- Ideally, a filter's role is to enhance image quality without significantly altering the raw components of the input data

- Incorrect filtering over- or under-processes the reconstruction data, affecting image quality by reducing resolution or contrast, increasing noise (high frequency), increasing background counts (low frequency), and blurring or over-sharpening detailed information. The image may become either too grainy or too blurry

- Goal - Enhance the true counts and remove the undesirables: background and noise

- You may recall that SPECT images generally have fewer counts; PET, however, is a more "count rich" acquisition, so a 128 matrix is used. When acquiring a SPECT study, which matrix is the most common?

- In PET, image filtering requires the conversion of the data from the spatial domain to the frequency domain.

- Consider the acquisition of PET data as a form of digital sampling. You are collecting only a sample of data as the image is acquired around the patient

- For the camera to resolve the incoming data many factors must be considered: sinograms, TOF, scatter/random, attenuation, attenuation correction, and count density per voxel

- That said, our focus should be on how the computer converts the spatial domain (counts) to the frequency domain. This is done with the Fourier Transform (if you want to review additional material, which was covered in CLRS 322, click the link)

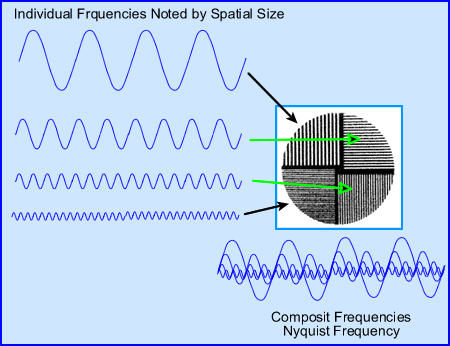

- Let us take a look at what happens when the spatial domain is converted to frequency. In general, frequencies can be broken down into different categories. Let us consider

- Low - background and large objects

- Middle - variation of smaller objects. As the objects continue to get smaller, the frequency gets higher and the cycles get shorter

- High frequencies - small to very small objects plus noise. One cannot differentiate between the smallest objects and noise because they fall beyond the resolution of the imaging system (FWHM)
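
The three frequency categories above can be illustrated with a minimal sketch. It assumes a synthetic 1-D count profile, and the band edges (`low_max`, `mid_max`) are arbitrary illustrative values, not clinical standards. It shows that a flat background plus a large object sits almost entirely in the low band, while adding pixel-to-pixel noise raises the share of energy in the high band.

```python
import numpy as np

def band_energy(profile, low_max=0.05, mid_max=0.25):
    """Return the fraction of spectral energy in low, mid and high bands.

    Frequencies are in cycles/pixel. The band edges are assumptions
    chosen only for illustration.
    """
    spectrum = np.abs(np.fft.rfft(profile)) ** 2
    freqs = np.fft.rfftfreq(len(profile))  # runs from 0 to 0.5 cycles/pixel
    low = spectrum[freqs <= low_max].sum()
    mid = spectrum[(freqs > low_max) & (freqs <= mid_max)].sum()
    high = spectrum[freqs > mid_max].sum()
    total = low + mid + high
    return low / total, mid / total, high / total

n = 128
x = np.arange(n)
background = np.full(n, 20.0)                           # flat background
large_object = 50.0 * np.exp(-((x - 64) / 20.0) ** 2)   # broad object
rng = np.random.default_rng(0)
noise = rng.normal(0.0, 3.0, n)                         # pixel-to-pixel fluctuation

smooth_low, smooth_mid, smooth_high = band_energy(background + large_object)
noisy_low, noisy_mid, noisy_high = band_energy(background + large_object + noise)

print(f"smooth profile: low={smooth_low:.4f} mid={smooth_mid:.2e} high={smooth_high:.2e}")
print(f"noisy profile:  low={noisy_low:.4f} mid={noisy_mid:.2e} high={noisy_high:.2e}")
```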

- When we talk about frequencies one must also consider the Nyquist Frequency (NF)

- The formula $F_n = 1/(2D)$ relates the NF to the pixel size $D$ and gives the highest frequency, in cycles per mm, that the matrix can represent. Let us apply some numbers to see how this works.

- If we have a 7.4 mm pixel in the 64 x 64 matrix and apply it to the above NF formula, then the value is 1/(2 x 7.4 mm) = 0.0676 cycles/mm

- So what does this mean? It means that the highest spatial frequency this matrix can capture is 0.0676 cycles/mm. Going beyond this point, in an attempt to find smaller objects, would not improve our resolution

- If a 128 matrix is used and the pixel size becomes 3.7 mm, then the NF becomes 0.135 cycles/mm

- The result of a 128 matrix is more cycles per mm and therefore better resolution

- Hence, when you are reconstructing an image and applying filters, the SPECT or PET data must stay within its Nyquist frequency, and the user should work with the entire frequency range up to that limit!
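
The Nyquist arithmetic above can be checked with a short sketch. It assumes the relation $F_n = 1/(2D)$, which reproduces the 0.135 cycles/mm quoted for the 3.7 mm pixel; the function name is hypothetical.

```python
def nyquist_cycles_per_mm(pixel_size_mm):
    """Nyquist frequency F_n = 1 / (2 * D), with D the pixel size in mm."""
    return 1.0 / (2.0 * pixel_size_mm)

# Pixel sizes taken from the worked example in the text.
for matrix, pixel_mm in [(64, 7.4), (128, 3.7)]:
    nf = nyquist_cycles_per_mm(pixel_mm)
    print(f"{matrix} x {matrix} matrix, {pixel_mm} mm pixel: NF = {nf:.4f} cycles/mm")
```

Halving the pixel size doubles the Nyquist frequency, which is why the 128 matrix can represent finer detail.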

- More comments on NF

- Manufacturers define NF in several different ways, two examples are cycles/mm and cycles/pixel

- The above calculations were done in cycles/mm; however, it may be more appropriate to consider the NF in cycles/pixel

- Why? Because the maximum NF should always be 0.5 cycles/pixel. The reason for this is that it takes two pixels to capture the entire cycle.

- Therefore, whenever we filter an image in SPECT or PET it is necessary to always capture and work with the entire NF. Failure to include all of it would result in not capturing all the data that is available for processing
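
The two ways of quoting the NF are related through the pixel size. The sketch below assumes the simple conversion cycles/mm = (cycles/pixel) / (pixel size in mm); the helper names are hypothetical.

```python
NYQUIST_CYCLES_PER_PIXEL = 0.5  # two pixels are needed to capture one cycle

def cycles_per_pixel_to_cycles_per_mm(freq_cycles_per_pixel, pixel_size_mm):
    """Convert a frequency from cycles/pixel to cycles/mm."""
    return freq_cycles_per_pixel / pixel_size_mm

def cycles_per_mm_to_cycles_per_pixel(freq_cycles_per_mm, pixel_size_mm):
    """Convert a frequency from cycles/mm to cycles/pixel."""
    return freq_cycles_per_mm * pixel_size_mm

nf_64 = cycles_per_pixel_to_cycles_per_mm(NYQUIST_CYCLES_PER_PIXEL, 7.4)
nf_128 = cycles_per_pixel_to_cycles_per_mm(NYQUIST_CYCLES_PER_PIXEL, 3.7)
print(f"64 matrix:  {nf_64:.4f} cycles/mm")
print(f"128 matrix: {nf_128:.4f} cycles/mm")
```

In cycles/pixel the limit is the same for every matrix, which is what makes that unit convenient when setting filter parameters.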

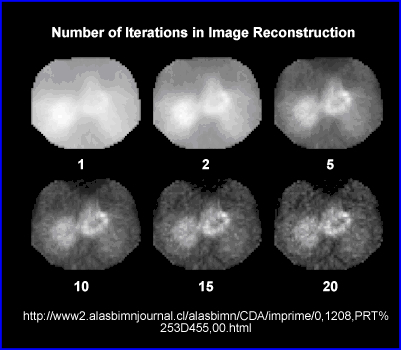

- The images above show the correct NF at 0.5 cycles/pixel, where 2 pixels capture the entire cycle. An example of exceeding this limit is also shown, with the NF set to 1.0 cycles/pixel, which leaves only one pixel per cycle. What happens if you try to image frequencies this high? Look below!

- Any time you go beyond 0.5 cycles/pixel aliasing occurs

A - Shows a set of images taken with different numbers of acquired PET slices: 16, 32, and 64. Notice the "squares" that can be seen. This Moire-type defect, i.e. aliasing, is seen more clearly in the background of the transverse slice, but also within the brain phantom.

B - Shows a set of bars that vary in size. Aliasing is noted in the center of the image, specifically where the bars just don't line up. This occurred because the NF was set at 1.0 cycles/pixel
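
Aliasing can also be seen numerically. The following sketch assumes a pure cosine pattern sampled once per pixel (a hypothetical stand-in for a bar phantom) and reports the frequency at which the sampled pattern actually shows up.

```python
import numpy as np

def apparent_frequency(true_freq_cycles_per_pixel, n_pixels=100):
    """Frequency (cycles/pixel) at which a sampled cosine appears."""
    pixels = np.arange(n_pixels)
    samples = np.cos(2.0 * np.pi * true_freq_cycles_per_pixel * pixels)
    spectrum = np.abs(np.fft.rfft(samples))
    freqs = np.fft.rfftfreq(n_pixels)  # 0 to 0.5 cycles/pixel
    return freqs[np.argmax(spectrum)]

for true_freq in (0.2, 0.8, 1.0):
    seen = apparent_frequency(true_freq)
    print(f"true {true_freq:.1f} cycles/pixel -> appears at {seen:.1f} cycles/pixel")
```

A pattern at 0.2 cycles/pixel is recovered correctly, whereas 0.8 cycles/pixel is indistinguishable from 0.2 after sampling, and 1.0 cycles/pixel collapses into a constant level. Anything above 0.5 cycles/pixel is folded back into the lower range.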

In the NF formula, $F_n$ is the Nyquist frequency and $D$ is the size of the pixel.

- Limited counts in nuclear medicine images always reduce the amplitude of the original image frequency. Collimation, patient-to-detector distance, type of acquisition orbit, Compton scatter, and the reconstruction process all affect image resolution

- The low/high frequency issues

- Smaller objects can be captured; however, as an object continues to get smaller it will eventually blend in with noise

- The fewer the counts, the greater the noise and the more grainy an image will be

- Low end frequency issues would normally be considered to be background and scatter; however, Poisson statistics also "blur" or "smooth" critical data in the low frequency domain. This occurs when reconstruction via Backprojection is applied. Also classified in the realm of low frequencies is the star defect

- The above graph is similar to the previous one, however, there are several differences

- The frequency curves that are displayed include: MTF of the gamma camera (black) with two separately acquired images in red

- Reading from left to right, activity on the left contains low frequencies, while that on the right contains high frequencies

- Noise level 1 represents a lower count image where noise breaks away midway from the MTF

- Noise 2 represents more counts acquired, when compared to Noise 1. Here the noise level breaks away from the MTF curve further into the higher frequency range

One should conclude several points:

- The more counts you acquire, the better your resolution, via the ability to see higher frequencies (smaller objects)

- Once the red curve breaks away from the MTF it is impossible to tell the difference between small objects and noise

- If more counts are acquired you can push the red-line further down the MTF, improving resolution
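
One way to put numbers on the link between counts and noise is a sketch that assumes Poisson counting statistics, where the standard deviation of N counts is sqrt(N), so the relative noise is 1/sqrt(N). The count values are arbitrary examples.

```python
import math

def relative_noise_percent(counts):
    """Relative Poisson noise, 100 * sqrt(N) / N, for N counts in a pixel."""
    return 100.0 / math.sqrt(counts)

for counts in (100, 400, 10000):
    print(f"{counts:>6} counts/pixel -> {relative_noise_percent(counts):.1f}% noise")
```

Quadrupling the counts only halves the relative noise, which is why large gains in count density are needed to move the noise level noticeably further along the MTF.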

- Pre-filter the raw data

- Many places actually pre-filter the raw data, which means that each 2-D image within the 180 or 360 degree rotation is filtered prior to reconstruction. Why?

- The rationale for this is simple - If you are dealing with low count images then you're going to have a lot of high frequency noise. By completing a 9-point smooth on each 2-D image you are removing excessive fluctuation in the high frequency range
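
A 9-point smooth can be sketched as a 3 x 3 weighted average applied to each projection. The 1-2-1 weights below are a commonly used choice and are an assumption here, since the text does not specify the kernel. The projection is simulated Poisson data, not real patient data.

```python
import numpy as np

# Assumed weights: 1-2-1 binomial kernel, normalised so the weights sum to 1.
KERNEL = np.array([[1, 2, 1],
                   [2, 4, 2],
                   [1, 2, 1]], dtype=float) / 16.0

def nine_point_smooth(image):
    """Replace each pixel by a weighted average of itself and its 8 neighbours."""
    image = np.asarray(image, dtype=float)
    padded = np.pad(image, 1, mode="edge")  # repeat edge pixels at the border
    rows, cols = image.shape
    smoothed = np.zeros((rows, cols), dtype=float)
    for dr in range(3):
        for dc in range(3):
            smoothed += KERNEL[dr, dc] * padded[dr:dr + rows, dc:dc + cols]
    return smoothed

rng = np.random.default_rng(1)
projection = rng.poisson(10.0, size=(64, 64))  # simulated low count projection
smoothed = nine_point_smooth(projection)

print(f"std before: {projection.std():.2f}, std after: {smoothed.std():.2f}")
```

The pixel-to-pixel fluctuation drops while the mean count level is left essentially unchanged, at the price of some blurring.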

- Filtering during imaging reconstruction

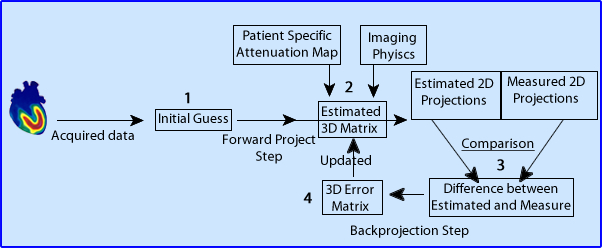

- When filtering during Backprojection and image reconstruction, two actions can improve image quality

- Removing low frequency (BKG)

- Removing high frequency (noise).

- Low pass filters only allow the passing of low to mid frequencies, whereas high frequency data is removed. Examples of these types of reconstruction filters include the Butterworth and Hanning filters

- High pass filters do the opposite. An example of this would be a Ramp filter

- Band pass filters eliminate low and high frequencies and only allow mid-range frequencies to be processed

- Restoration filters attempt to restore data and improve the quality of the image. Two examples would include Wiener and Metz

- Partial volume rendering reconstruction filters allow the user to create 3-D surface images of an organ. However, they generate the edge of the object where the activity starts to build up. On the downside, you cannot look beyond the surface. Analyzing the external anatomy of the brain might lead to finding a large "hole" on the brain's exterior surface

- Post-filtering (after reconstruction) is also sometimes done, especially if the end result of reconstruction is a grainy or statistically noisy image.

- Filter the reconstruction with high and low pass filters

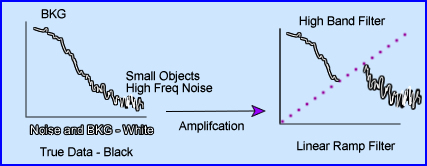

- Ramp filter (high pass) - Two issues to consider

- The main role of the linear ramp filter is to amplify the frequency to better resolve the data. However, if the entire frequency range were amplified, then true counts, bkg, and noise would all be amplified. This would serve no purpose

- Hence, the ramp filter cuts off the low frequency background, which removes unwanted data. This is good! Removing low frequency also removes image blurriness and the star defect

- What remains are true counts + high frequency noise.

- So what do you do with the high frequency noise?

- Adding a low pass filter to the reconstruction process will cut off the noise (Keep this thought in mind)
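
The behaviour of the ramp filter can be sketched in a few lines. This assumes the ideal ramp, whose weight equals the absolute frequency in cycles/pixel, applied to a 1-D profile through the FFT; real reconstruction software may scale or window it differently.

```python
import numpy as np

def ramp_filter(n_samples):
    """Ramp weights |f| for each FFT bin, with f in cycles/pixel."""
    return np.abs(np.fft.fftfreq(n_samples))

def apply_filter(profile, weights):
    """Multiply the profile's spectrum by the weights and transform back."""
    return np.real(np.fft.ifft(np.fft.fft(profile) * weights))

n = 64
weights = ramp_filter(n)
flat_background = np.full(n, 30.0)  # pure zero-frequency (background) signal
filtered_background = apply_filter(flat_background, weights)

print(f"weight at zero frequency: {weights[0]:.2f}")
print(f"weight at Nyquist:        {weights.max():.2f}")
print(f"largest value left of the flat background: {np.abs(filtered_background).max():.2e}")
```

The weight is zero at zero frequency, so a uniform background is removed, and it rises linearly to its maximum at the Nyquist frequency, which is why high frequency noise is emphasised unless a low pass filter is added.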

- Filters and orders

- Some filters allow you to adjust their order. Essentially what that does is change the slope of the filter. In the case above, when you increase the order number on a Butterworth filter you increase the negative component of the slope, making it steeper

- This manipulation allows the user to remove or add different frequencies to the reconstructed image

- Note that an order of 8 increases the amount of lower frequencies, but doesn't accept as many frequencies at the higher end. Decreasing the order number reduces the amount of lower frequencies, but brings in more high frequencies

- Accepting more low frequencies and fewer high frequencies creates a more blurry/smooth image. Doing the opposite causes a grainy/noisy image

- Another element of Butterworth is the manipulation of image contrast. The steeper the slope of the curve, the greater the response to contrast, while a less negative slope is less sensitive to image contrast
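
The effect of the order can be sketched with one common form of the Butterworth filter, $B(f) = 1/\sqrt{1 + (f/f_c)^{2n}}$. Vendors differ in the exact exponent convention, so this form is an assumption, and the 0.25 cycles/pixel cut-off is an arbitrary example value.

```python
import numpy as np

def butterworth(freq, cutoff, order):
    """Butterworth low pass weight, assuming B(f) = 1 / sqrt(1 + (f/fc)^(2n))."""
    return 1.0 / np.sqrt(1.0 + (freq / cutoff) ** (2 * order))

cutoff = 0.25  # cycles/pixel, illustrative only
test_freqs = (0.15, 0.25, 0.40)

for order in (2, 4, 8):
    weights = [butterworth(f, cutoff, order) for f in test_freqs]
    row = "  ".join(f"f={f:.2f}: {w:.3f}" for f, w in zip(test_freqs, weights))
    print(f"order {order}: {row}")
```

With this form, every order passes about 71% of the amplitude at the cut-off itself. Below the cut-off a higher order passes more, and above it a higher order passes less, which is the steeper roll-off described above.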

- Filters and cut-offs

- Another angle on filters has to do with their cut-off. The examples above show the cut-off frequency set to three different points. These MTF curves include the same orders of 2, 4, and 8

- Setting the cut-off means that anything beyond is rejected

- Note the effects of the different cut-offs above. A cut-off of 0.4 with an order of 6 or 8 means that you will eliminate all data past that point. Of the three cut-offs represented, 0.4 would be the most acceptable. Why?

- By adjusting the cut-off we can effectively eliminate high frequency noise, leaving BKG and true count data. This improves the image quality via the elimination of statistical noise and makes the image look more fuzzy/less grainy

- Finally, it is usually recommended that you set the cut-off to 0.5 cycles/pixel.

- Combining Ramp and Butterworth filters

- The key is to be able to remove as much of the unwanted frequencies as possible. Hence, the application of both low and high filters is required

- The application of Ramp and one of the above low frequency filters is an example of how this process should work

- In addition, there are three "globs" of data: BKG, Patient Data, and Noise

- Given the different filters above, which combination of two best removes most of the unwanted data and saves most of the true data?

- When low and high frequency filters are used together this is referred to as windowing

- Given the previous image showing four different applications of filters, here are two combinations using low and high pass filters. Which of these two combinations is best suited for your filtered reconstructed data?

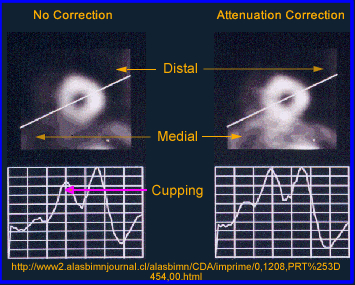

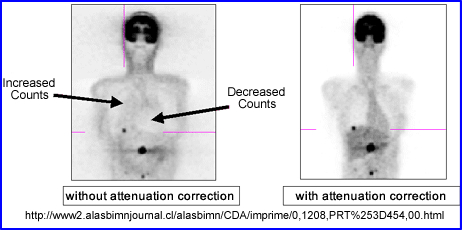
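
Windowing can be sketched as the product of the two filter curves. The example assumes an ideal ramp and a Butterworth of the form $1/\sqrt{1 + (f/f_c)^{2n}}$, with a cut-off of 0.4 cycles/pixel and an order of 8 picked only as example values.

```python
import numpy as np

def windowed_ramp(n_samples, cutoff=0.4, order=8):
    """Ramp multiplied by a Butterworth low pass window (assumed forms)."""
    freqs = np.abs(np.fft.fftfreq(n_samples))  # cycles/pixel, 0 to 0.5
    ramp = freqs
    low_pass = 1.0 / np.sqrt(1.0 + (freqs / cutoff) ** (2 * order))
    return freqs, ramp * low_pass

freqs, combined = windowed_ramp(128)
peak_freq = freqs[np.argmax(combined)]

print(f"weight at zero frequency: {combined[0]:.3f}")
print(f"weight at Nyquist (0.5):  {combined[np.argmax(freqs)]:.3f}")
print(f"frequency passed most strongly: {peak_freq:.3f} cycles/pixel")
```

The combined curve is zero at zero frequency (background removed), rises through the mid frequencies where the patient data lies, and rolls off toward the Nyquist frequency where noise dominates.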

- Attenuation correction

- Filters have been designed to take attenuation into account

- The only problem with this approach is that the user can only apply this to areas of the body where the body habitus density remains relatively constant (brain and liver)

- Why should attenuation be taken into account? Remember the cupping artifact? Graphically, the above example shows the effects of cupping in the LV of the myocardium. A profile is drawn through the heart where decreased counts can be seen in the septal wall. Can you explain this artifact?

- The two types of attenuation filters used in nuclear medicine are Chang (applied post reconstruction) and Sorenson (applied prior to reconstruction)

- The Chang method starts by drawing an ROI around the widest portion of the transverse slice. Once the attenuation value is in place, the filter can correct for the lost counts within the ROI. In theory this increases counts coming from the center of the image, negating the cupping artifact. Remember - the closer to the center of the brain (or any organ) the greater the gamma attenuation and the greater the need for correction

- While attenuation correction filters are sometimes used to improve image quality, this process cannot be done if there are different areas of density within the FOV. At this point only a transmission source can be used to compensate for the differences in density within the imaging media. Examples of this would include the myocardium and bone
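
The idea behind the Chang correction can be sketched for the simplest case: a circular ROI with one uniform attenuation coefficient. The sketch uses the first-order form, in which the correction factor at a point is the reciprocal of the transmission averaged over all projection angles. The radius (8 cm) and coefficient (0.12 per cm) are illustrative assumptions, not recommended values.

```python
import numpy as np

def chang_correction_factor(x_cm, y_cm, radius_cm, mu_per_cm, n_angles=64):
    """First-order Chang factor for a point inside a uniform circular outline."""
    theta = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    # Distance from the point to the circle's edge along each direction.
    along = x_cm * np.cos(theta) + y_cm * np.sin(theta)
    across = x_cm * np.sin(theta) - y_cm * np.cos(theta)
    path_cm = -along + np.sqrt(radius_cm ** 2 - across ** 2)
    mean_transmission = np.mean(np.exp(-mu_per_cm * path_cm))
    return 1.0 / mean_transmission

radius_cm = 8.0    # assumed outline radius
mu_per_cm = 0.12   # assumed uniform attenuation coefficient

center = chang_correction_factor(0.0, 0.0, radius_cm, mu_per_cm)
near_edge = chang_correction_factor(7.0, 0.0, radius_cm, mu_per_cm)
print(f"correction factor at center:    {center:.2f}")
print(f"correction factor near the edge: {near_edge:.2f}")
```

The factor is largest at the center and falls toward the edge, which matches the point made above: the deeper the activity, the greater the attenuation and the greater the correction needed.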

- Transmission imaging can be accomplished with the use of a line source and a fan beam collimator or with an x-ray tube (CT), but in today's technology one almost always uses CT
