Iterative Denoising

Overview

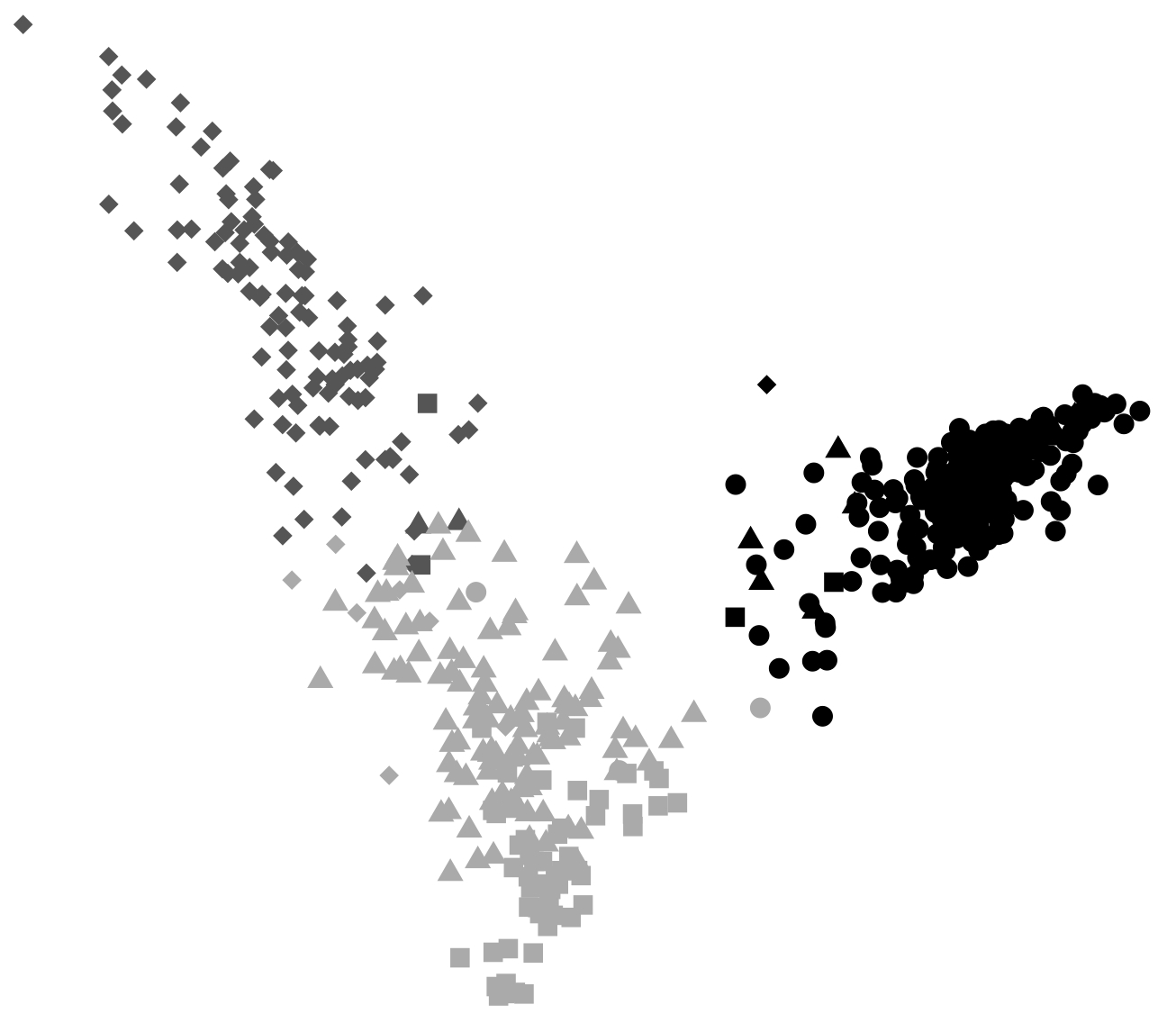

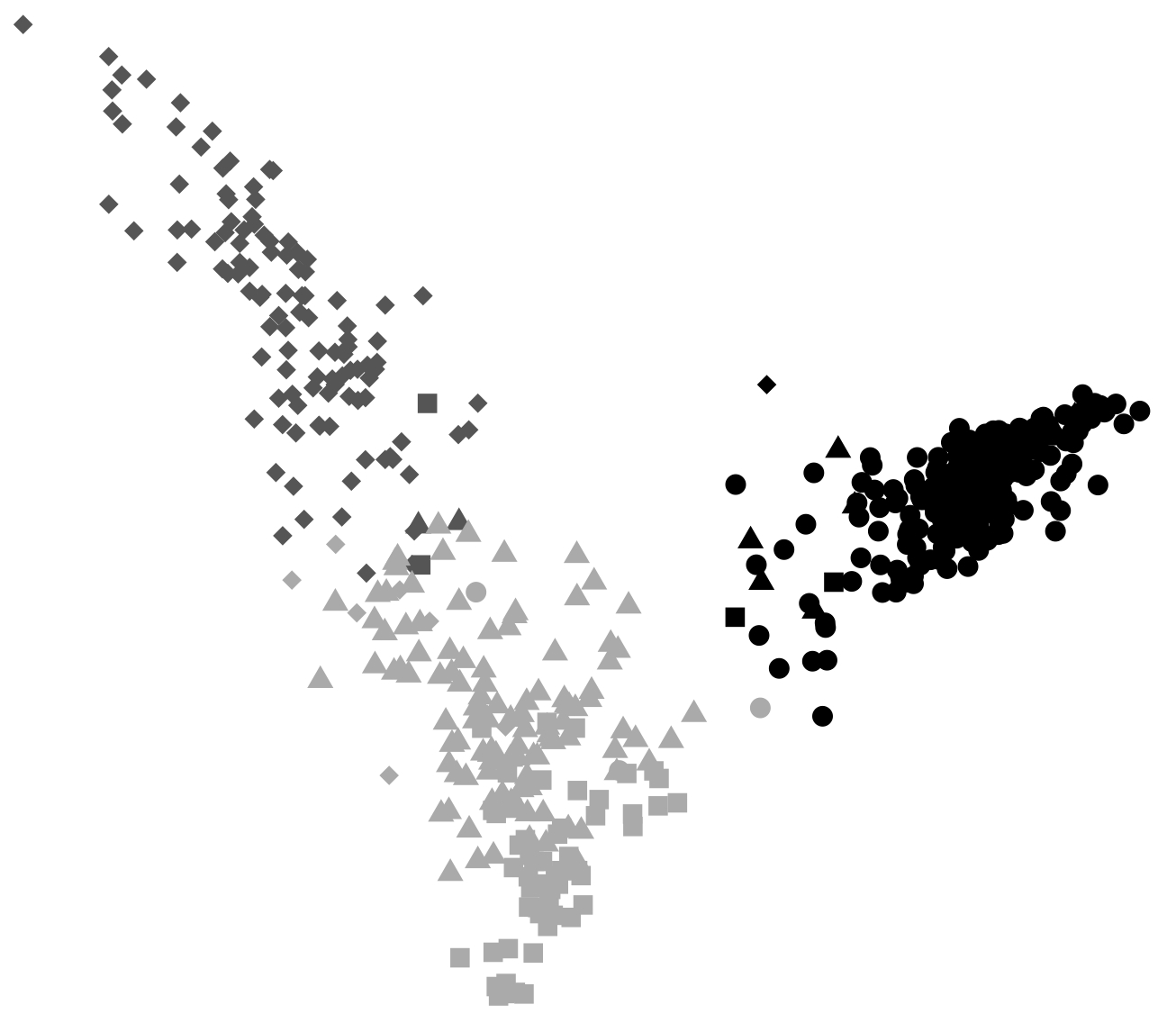

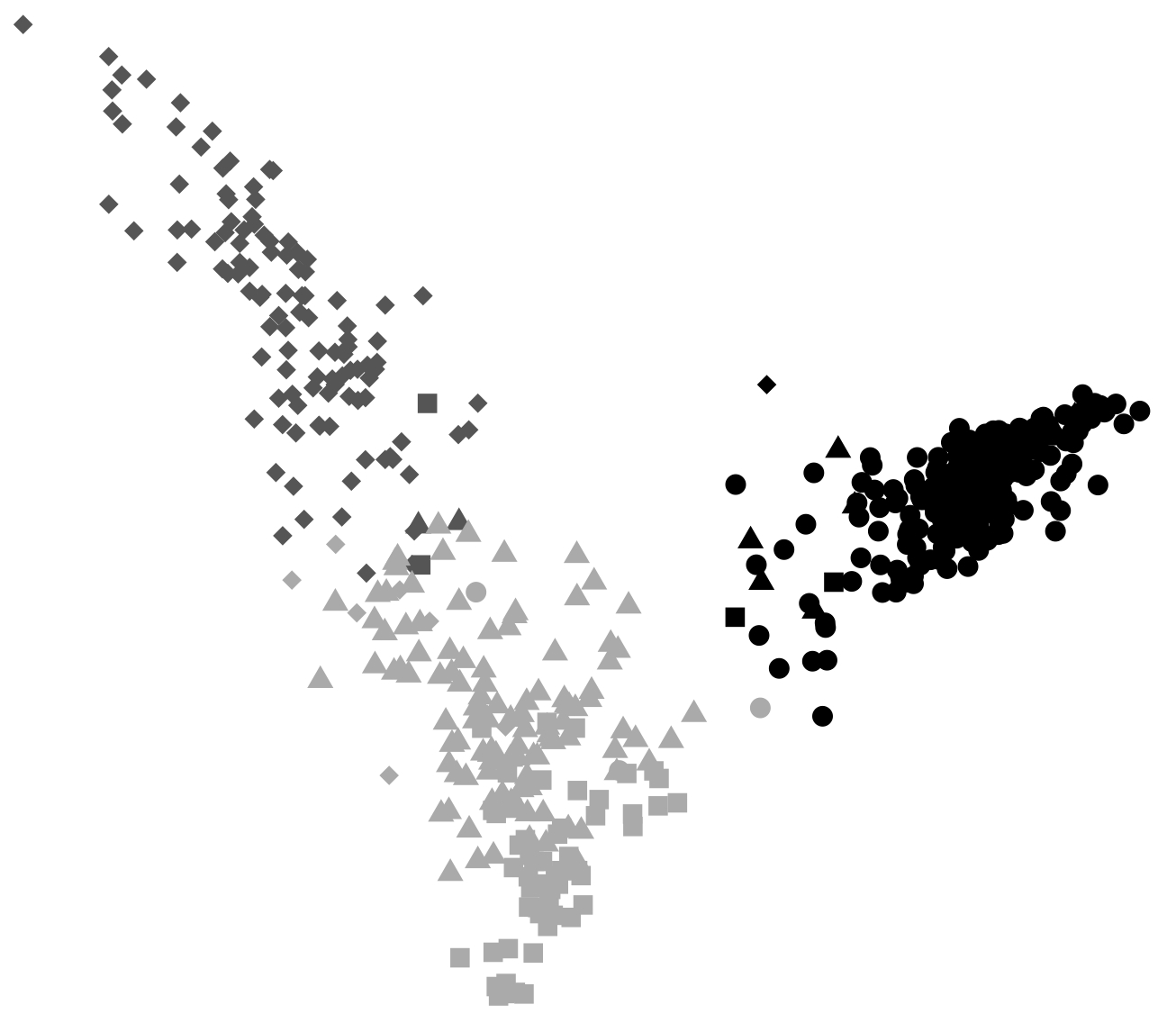

Data mining of text and network traffic is beset by several difficulties when the datasets are large and high-dimensional. From a performance perspective, searching a high-dimensional space can be prohibitively expensive. Such spaces are also difficult for the user to visualize and comprehend. Complex datasets often contain local relationships of interest, findings that global searches might miss. Finally, in an unsupervised setting, the user cannot readily analyze the corpus without first imposing some structure on the data. Traditional data mining approaches are limited: their algorithms make distributional assumptions about the data, do not scale because of the Curse of Dimensionality, and do not provide intuitive ways for a user to visualize high-dimensional data. Our approach to overcoming these difficulties is called Iterative Denoising, a methodology that allows the user to explore the data and extract meaningful, implicit, and previously unknown information from a large unstructured corpus.

Publications

- "Iterative Denoising of Computer Network Application Traffic," Kendall Giles, David Marchette, Carey Priebe, Don Waagen, Technical Report No. 655, Department of Applied Mathematics and Statistics, Johns Hopkins University, Baltimore, Maryland, November 2007.

- "Iterative Denoising," Kendall Giles, Michael Trosset, David Marchette, Carey Priebe, Computational Statistics, accepted for publication, September 2007.

- "Knowledge Discovery in Computer Network Data: A Security Perspective," Kendall Giles, Ph.D. Dissertation, Johns Hopkins University, Baltimore, Maryland, October 2006.

- "Fast Iterative Denoising," Kendall Giles, Michael Trosset, David Marchette, Carey Priebe, Technical Report No. 653, Department of Applied Mathematics and Statistics, Johns Hopkins University, Baltimore, Maryland, December 2005.

- "Integrated Sensing and Processing Decision Trees," Carey Priebe, David Marchette, and Dennis Healy, IEEE Transactions on Pattern Analysis and Machine Intelligence, 26(6):699–708, 2004.

- "Iterative Denoising for Cross-Corpus Discovery," Carey Priebe, David Marchette, Youngser Park, Ed Wegman, Jeff Solka, A. Socolinsky, Damianos Karakos, K. Church, R. Guglielmi, R. Coifman, D. Lin, Dennis Healy, M. Jacobs, and Anna Tsao, in J. Antoch, editor, COMPSTAT: Proceedings in Computational Statistics, 16th Symposium, pages 381–392, Physica-Verlag, Springer, 2004.

Presentations

- "Knowledge Discovery with Iterative Denoising," Computer Science Department Seminar, Virginia Commonwealth University, Richmond, Virginia, October 8, 2008.

- "Interactive Text Analysis with Iterative Denoising," Interface 2008, Text Data Analysis Session, Durham, North Carolina, May 23, 2008.

- "Text Analysis with Iterative Denoising," International Biometric Society, Eastern North American Region, Arlington, Virginia, March 19, 2008.

- "Iterative Denoising: A Flexible (and applicable to bioinformatics?) Knowledge Discovery Methodology," Bioinformatics Colloquium, George Mason University, February 12, 2008.

- "Basics of Knowledge Discovery Engines," Mathematics of Knowledge and Search Engines research program, Institute for Pure and Applied Mathematics, University of California, Los Angeles, Los Angeles, California, September 2007.

- "Towards the Use of Iterative Denoising for Biological Data Exploration," 2007 Summer Research Conference, Southern Regional Council on Statistics, Richmond, Virginia, June 2007.

Collaborators

Links

Disclaimer

This page does not reflect an official position of Virginia Commonwealth University.